First workshop on Typology for Polyglot NLP

Co-located with ACL 2019

Program

Keynote Speakers

- Emily M. Bender’s slides: slides

- Jason Eisner’s slides: slides

- Balthasar Bickel’s slides: slides

- Sabine Stoll’s slides: slides

- Isabelle Augenstein’s slides: slides

Accepted Abstracts

CONTEXTUALIZATION OF MORPHOLOGICAL INFLECTION

Ekaterina Vylomova, Ryan Cotterell, Timothy Baldwin, Trevor Cohn and Jason Eisner

[poster]

CROSS-LINGUAL CCG INDUCTION: LEARNING CATEGORIAL GRAMMARS VIA PARALLEL CORPORA

Kilian Evang

[poster]

CROSS-LINGUISTIC ROBUSTNESS OF INFANT WORD SEGMENTATION ALGORITHMS: OVERSEGMENTING MORPHOLOGICALLY COMPLEX LANGUAGES

Georgia R. Loukatou

CROSS-LINGUISTIC SEMANTIC TAGSET FOR CASE RELATIONSHIPS

Ritesh Kumar, Bornini Lahiri and Atul Kr. Ojha

DISSECTING TREEBANKS TO UNCOVER TYPOLOGICAL TRENDS. A MULTILINGUAL COMPARATIVE APPROACH

Chiara Alzetta, Felice Dell’Orletta, Simonetta Montemagni and Giulia Venturi

[poster]

FEATURE COMPARISON ACROSS TYPOLOGICAL RESOURCES

Tifa de Almeida, Youyun Zhang, Kristen Howell and Emily M. Bender

POLYGLOT PARSING FOR ONE THOUSAND AND ONE LANGUAGES (AND THEN SOME)

Ali Basirat, Miryam de Lhoneux, Artur Kulmizev, Murathan Kurfalı, Joakim Nivre and Robert Östling

[poster]

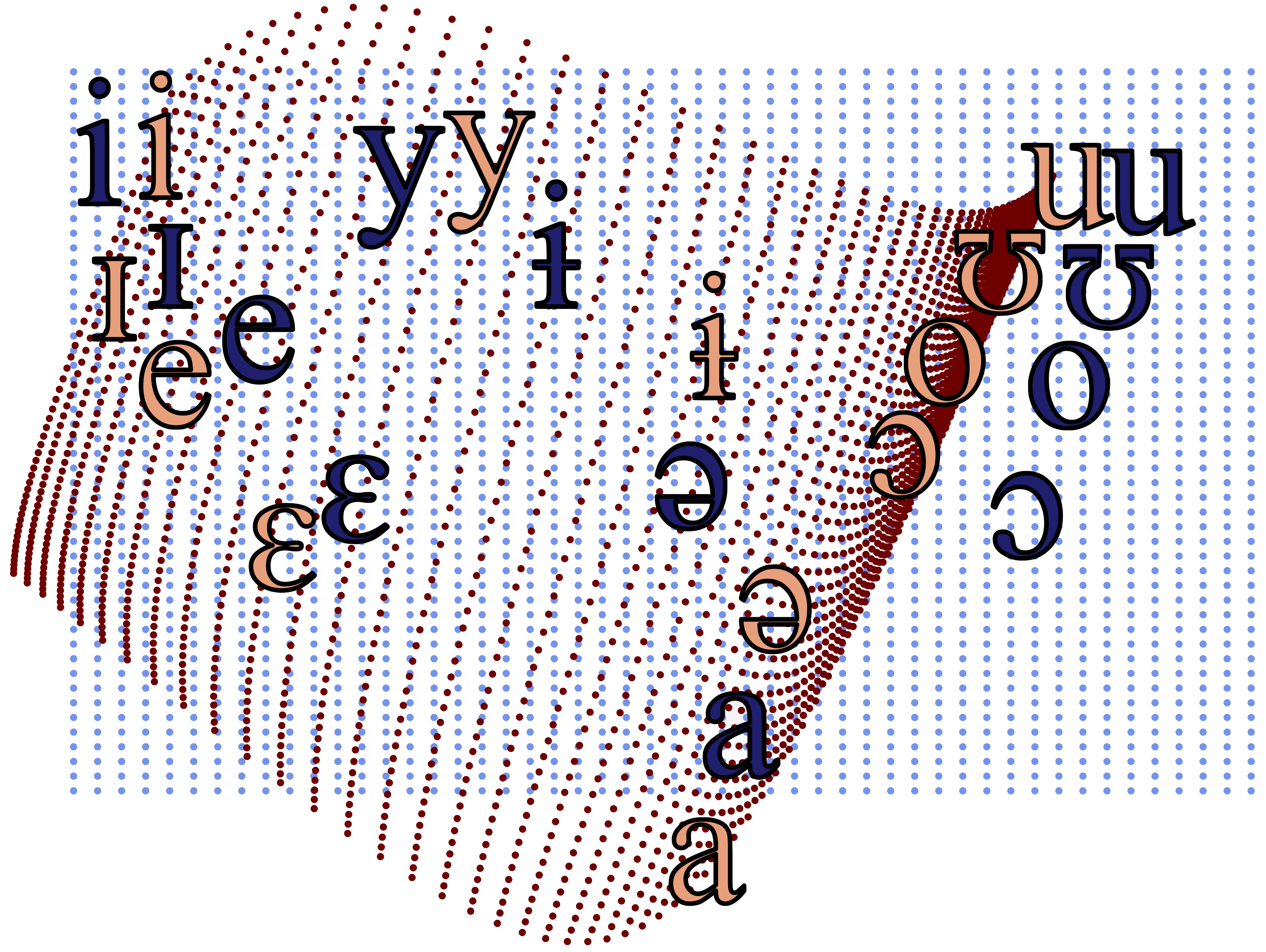

PREDICTING CONTINUOUS VOWEL SPACES IN THE WILDERNESS

Emily Ahn and David R. Mortensen

[poster]

SYNTACTIC TYPOLOGY FROM PLAIN TEXT USING LANGUAGE EMBEDDINGS

Taiqi He and Kenji Sagae

[poster]

TOWARDS A COMPUTATIONALLY RELEVANT TYPOLOGY FOR POLYGLOT/MULTILINGUAL NLP

Ada Wan

TOWARDS A MULTI-VIEW LANGUAGE REPRESENTATION: A SHARED SPACE OF DISCRETE AND CONTINUOUS LANGUAGE FEATURES

Arturo Oncevay, Barry Haddow and Alexandra Birch

[poster]

TRANSFER LEARNING FOR COGNATE IDENTIFICATION IN LOW-RESOURCE LANGUAGES

Eliel Soisalon-Soininen and Mark Granroth-Wilding

[poster]

TYPOLOGICAL FEATURE PREDICTION WITH MATRIX COMPLETION

Annebeth Buis and Mans Hulden

[poster]

UNSUPERVISED DOCUMENT CLASSIFICATION IN LOW-RESOURCE LANGUAGES FOR EMERGENCY SITUATIONS

Nidhi Vyas, Eduard Hovy and Dheeraj Rajagopal

USING TYPOLOGICAL INFORMATION IN WALS TO IMPROVE GRAMMAR INFERENCE

Youyun Zhang, Tifa de Almeida, Kristen Howell and Emily M. Bender

[poster]

WHAT DO MULTILINGUAL NEURAL MACHINE TRANSLATION MODELS LEARN ABOUT TYPOLOGY?

Ryokan Ri and Yoshimasa Tsuruoka

[poster]

Summary

A long-standing goal in Natural Language Processing (NLP) is the development of robust language technology applicable across the world’s languages. Until this goal is met, there will be limited global access to important applications such as Machine Translation or Information Retrieval. The main research challenge in multilingual NLP is to mitigate the serious bottleneck concerning the lack of annotated data for the majority of the world’s languages. Although we can approach this challenge via transfer of knowledge from resource-rich to resource-lean languages or via creation of models by joint learning from several languages, we are still far from accurate and efficient models applicable to any language of the world.

One of the main problems is the huge diversity of human languages. While languages can share universal features at a deep level, at the surface level their structures and categories vary significantly. This compromises the performance of language-agnostic NLP algorithms when applied on a large scale: their design, training, and hyperparameter tuning suffer from language-specific biases. For instance, the perplexities of word-level language models suffer particularly on languages with rich morphology because this information is disregarded. Moreover, the variation in syntactic trees, unless reduced, hinders the performance of structured encoders when applied cross-lingually on Natural Language Inference.

One highly promising solution to cross-lingual variation lies in linguistic typology. Linguistic typology provides a systematic, empirical comparison of the world’s languages with respect to a variety of linguistic properties. Work on NLP has shown that typological information documented in publicly available databases can provide a rich source of guidance for choice of data, features and algorithm design in multilingual NLP. Just one well-known example is this work that integrates typological information into multilingual models in the form of “selective sharing”, an approach that ties language-specific model parameters according to the typological features of each language.
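The idea of tying parameters by typological feature can be illustrated with a minimal sketch. It is not the cited work's implementation: the class name `SelectivelySharedParams`, the feature names, and the simplified feature table are all assumptions made for illustration. Each weight is keyed by a (feature, value) pair rather than by language, so languages that agree on a typological feature read and update the same parameter.

```python
from collections import defaultdict

# Simplified, illustrative typological table (an assumption of this sketch,
# not taken from a specific database export).
TYPOLOGY = {
    "english": {"verb_object": "VO", "adposition": "prepositions"},
    "spanish": {"verb_object": "VO", "adposition": "prepositions"},
    "japanese": {"verb_object": "OV", "adposition": "postpositions"},
}


class SelectivelySharedParams:
    """Hypothetical parameter store: weights are tied across languages
    that share the same value for a typological feature."""

    def __init__(self, typology):
        self.typology = typology
        self.weights = defaultdict(float)  # unseen keys start at 0.0

    def _key(self, language, feature):
        # The key depends on the feature value, not on the language itself.
        return (feature, self.typology[language][feature])

    def get(self, language, feature):
        return self.weights[self._key(language, feature)]

    def update(self, language, feature, delta):
        self.weights[self._key(language, feature)] += delta


params = SelectivelySharedParams(TYPOLOGY)
params.update("english", "verb_object", 0.5)  # learn from English data
shared = params.get("spanish", "verb_object")     # Spanish sees the same weight
separate = params.get("japanese", "verb_object")  # Japanese (OV) does not
```

In this sketch an update made while training on English is visible to Spanish, because both are VO languages, while Japanese keeps a separate weight.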

Although many such approaches have been proposed, typological information has not yet been fully exploited in NLP. Most experiments have been limited to a small number of typological features (mostly related to word order) and tasks (mostly dependency parsing), while many others could be explored. One potential reason for this could be the limited awareness or understanding of typology among the NLP community. However, existing typological databases are also lacking in coverage, and their interpretable, absolute, discrete features cannot be integrated straightforwardly into state-of-the-art NLP algorithms, which are opaque, contextual, and probabilistic. A possible solution to this is the automatic induction of typological information from data. For example, expressions of tense can be extracted from a multi-parallel corpus starting from a pivot and finding equivalents in other languages based on distributional methods. Although this research thread has been flourishing recently, automatically induced typological information has not yet been integrated into NLP algorithms or multilingual NLP on a large scale, which is a promising line for future research.

Our TyP-NLP workshop will be the first dedicated venue for typology-related research and its integration in multilingual NLP. Long overdue, the workshop is specifically aimed at raising awareness of linguistic typology and its potential in supporting and widening the global reach of multilingual NLP. It will foster research and discussion on open problems, not only within the active community working on cross- and multilingual NLP but also inviting input from leading researchers in linguistic typology. The workshop will provide focussed discussion on a range of topics, including (but not limited to) the following:

- Language-independence in training, architecture design, and hyperparameter tuning. Is it possible (and if yes, how) to unravel unknown biases that hinder the cross-lingual performance of NLP algorithms and to leverage the knowledge on such biases in NLP algorithms?

- Integration of typological features in language transfer and joint multilingual learning. In addition to established techniques such as “selective sharing”, are there alternative ways of encoding heterogeneous external knowledge in machine learning algorithms?

- New applications. The application of typology to currently uncharted territories, i.e. the use of typological information in NLP tasks where such information has not been investigated yet.

- Automatic inference of typological features. The pros and cons of existing techniques (e.g. heuristics derived from morphosyntactic annotation, propagation from features of other languages, supervised Bayesian and neural models) and discussion on emerging ones.

- Typology and interpretability. The use of typological knowledge for interpretation of hidden representations of multilingual neural models, multilingual data generation and selection, and typological annotation of texts.

- Improvement and completion of typological databases. Combining linguistic knowledge and automatic data-driven methods towards the joint goal of improving the knowledge on cross-linguistic variation and universals.
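As a rough illustration of one technique named in the list above, propagation from features of other languages, the following sketch fills in a missing feature by majority vote over the most similar languages. The function names, the similarity measure, and the toy table with placeholder languages are assumptions of this sketch and are not drawn from any particular system or database.

```python
from collections import Counter


def similarity(a, b):
    """Share of features, known in both languages, on which they agree."""
    shared = [f for f in a if f in b and a[f] is not None and b[f] is not None]
    if not shared:
        return 0.0
    return sum(a[f] == b[f] for f in shared) / len(shared)


def propagate_feature(table, target, feature, k=2):
    """Predict table[target][feature] by majority vote over the k most
    similar languages for which that feature is documented."""
    candidates = [
        (similarity(table[target], feats), lang)
        for lang, feats in table.items()
        if lang != target and feats.get(feature) is not None
    ]
    # Most similar first; ties are broken alphabetically for determinism.
    candidates.sort(key=lambda pair: (-pair[0], pair[1]))
    votes = Counter(table[lang][feature] for _, lang in candidates[:k])
    return votes.most_common(1)[0][0] if votes else None


# Toy table with placeholder languages; None marks an undocumented feature.
toy_table = {
    "lang_a": {"verb_object": "OV", "adposition": "post", "genitive_noun": "GenN"},
    "lang_b": {"verb_object": "OV", "adposition": "post", "genitive_noun": "GenN"},
    "lang_c": {"verb_object": "VO", "adposition": "prep", "genitive_noun": "NGen"},
    "lang_d": {"verb_object": "OV", "adposition": None, "genitive_noun": "GenN"},
}

predicted = propagate_feature(toy_table, "lang_d", "adposition")
```

Here `lang_d` agrees with `lang_a` and `lang_b` on every feature documented for both, so their shared adposition value is propagated. A realistic system would also need to account for genealogical and areal relatedness, which this sketch ignores.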

The workshop will feature several invited speakers from the fields of (multilingual) NLP and linguistic typology (see the list below), focusing on the themes mentioned above. We will also host a panel to bring in different perspectives on the problems shared by the two disciplines. Finally, we will issue a call for abstract submissions, and the accepted abstracts will be presented at the workshop, providing new insights and ideas. We plan to make the short abstracts non-archival, so that researchers who prefer to publish in the main conference proceedings are not discouraged from submitting, while still ensuring that interesting, exciting, and thought-provoking research is presented at the workshop. In particular, we will solicit 2-page or 4-page abstracts of already published work or work in progress.

In general, we believe that this inter-disciplinary workshop will be a great opportunity to encourage research in a timely area that has not received such dedicated attention before but that is of interest to the large and diverse community of researchers working on multilingual NLP. We expect this workshop to ultimately lead to key methodology for improving the global reach of language technology.

Invited Speakers

Emily M. Bender’s primary research interests are in multilingual grammar engineering, the study of variation, both within and across languages, and the relationship between linguistics and computational linguistics. She is the LSA’s delegate to the ACL. Her 2013 book Linguistic Fundamentals for Natural Language Processing: 100 Essentials from Morphology and Syntax aims to present linguistic concepts in a manner accessible to NLP practitioners.

Jason Eisner works on machine learning, combinatorial algorithms, probabilistic models of linguistic structure, and declarative specification of knowledge and algorithms. His work addresses the question, “How can we appropriately formalize linguistic structure and discover it automatically?”

Balthasar Bickel aims at understanding the diversity of human language with rigorously tested causal models, i.e. at answering the question what’s where why in language. What structures are there, and how exactly do they vary? Engaged in both linguistic fieldwork and statistical modeling, he focuses on explaining universally consistent biases in the diachrony of grammar properties, biases that are independent of local historical events.

Sabine Stoll questions how children can cope with the incredible variation exhibited in the approximately 6000–7000 languages spoken around the world. Her main focus is the interplay of innate biological factors (such as the capacity for pattern recognition and imitation) with idiosyncratic and culturally determined factors (such as the type and quantity of input). Her approach is radically empirical, based first and foremost on the quantitative analysis of large corpora that record how children learn diverse languages.

Isabelle Augenstein has been a tenure-track assistant professor at the University of Copenhagen, Department of Computer Science, since July 2017. She is affiliated with the Copenhagen NLP group and the Machine Learning Section, and works in the general areas of Statistical Natural Language Processing and Machine Learning. Her main research interests are weakly supervised and low-resource learning with applications including information extraction, machine reading and fact checking.